AI Deepfake Detector Tool Measures Reflection in the Eyes

The new tool, developed by computer scientists at the University at Buffalo, is a relatively simple system, yet it has proved 94% effective at detecting deepfake content in the researchers' tests. For the uninitiated, deepfake images and videos are generated by AI-based apps that analyze thousands of photos and videos of real people to learn how a person moves, smiles, or frowns. Using that information, the tools can create highly realistic digital clones of real individuals, or realistic-looking images and videos of people who do not exist at all. They can also be used to generate fake faces of popular celebrities to spread misinformation and fool people online.

How Does It Work?

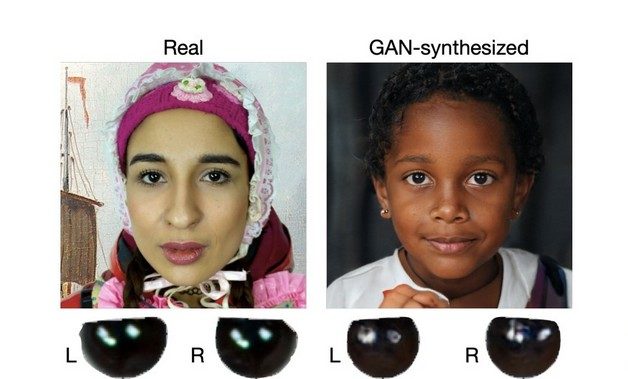

Conventional deepfake detection tools analyze content for unnatural artifacts such as irregular facial movements or excessive skin smoothing. The new tool instead examines the reflection of light in the subject's eyes. According to the scientists, AI-based generators do not take into account how a light source reflects off a subject's eyes when they create deepfake content. As a result, the two corneas in a deepfake image often show different reflections, whereas in a real photo both eyes reflect the same light sources, so the reflections should look nearly identical. The comparison image above illustrates the difference.
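To make the idea concrete, here is a minimal Python sketch of the first step, isolating the light reflection in each eye. It is not the Buffalo team's code: it assumes each eye has already been located and cropped into a small grayscale patch with values from 0 to 255, and it treats any pixel at or above a hypothetical brightness threshold of 200 as part of the reflection. The function names `highlight_mask` and `highlight_centroid` are illustrative.

```python
# Minimal sketch (assumption): find the specular highlight, i.e. the reflection
# of the light source, in a cropped grayscale eye patch by keeping only its
# brightest pixels. This is an illustration, not the researchers' code.
from typing import List, Optional, Tuple

Patch = List[List[int]]  # rows of grayscale values, 0 (black) to 255 (white)
Mask = List[List[bool]]


def highlight_mask(patch: Patch, threshold: int = 200) -> Mask:
    """Mark pixels bright enough to count as the reflection.

    The default threshold of 200 is a hypothetical value chosen for this example.
    """
    return [[value >= threshold for value in row] for row in patch]


def highlight_centroid(mask: Mask) -> Optional[Tuple[float, float]]:
    """Return the (row, column) centre of the highlight, or None if there is none."""
    points = [(r, c) for r, row in enumerate(mask) for c, on in enumerate(row) if on]
    if not points:
        return None
    rows = sum(r for r, _ in points) / len(points)
    cols = sum(c for _, c in points) / len(points)
    return (rows, cols)


# Toy 4x4 eye patches. In a real photo, both eyes reflect the light source at
# roughly the same spot; in the "fake" right eye the highlight has drifted.
left_eye = [[10, 10, 10, 10],
            [10, 250, 240, 10],
            [10, 245, 10, 10],
            [10, 10, 10, 10]]
right_eye_real = [row[:] for row in left_eye]
right_eye_fake = [[10, 10, 10, 10],
                  [10, 10, 10, 10],
                  [10, 10, 10, 230],
                  [10, 10, 235, 240]]

for name, eye in [("left", left_eye), ("right (real)", right_eye_real),
                  ("right (fake)", right_eye_fake)]:
    print(name, highlight_centroid(highlight_mask(eye)))
```

In the toy data, the real pair places the highlight at the same position in both eyes, while the fake right eye puts it in a different corner. A practical detector would also need to align the two eye crops before comparing them, which this sketch skips.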

The scientists add that deepfake generators also do not fully understand human anatomy or how certain features change as they interact with the physical world. Eye reflections are one such feature: they depend on the light sources in front of the subject when the image or video is captured. The detection tool exploits this inconsistency in deepfake content. It analyzes an image and produces a similarity score for the reflections in the two eyes. A high score means the image is unlikely to be a deepfake, while a low score means it is more likely to be fake.
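How the tool turns the two reflections into a single number is not detailed here, so this minimal Python sketch assumes one plausible choice: the intersection-over-union (IoU) of two binary masks marking where each eye's highlight sits, after the eye crops have been aligned to the same size. The `similarity_score` and `verdict` names and the 0.5 cut-off are hypothetical, not taken from the study.

```python
# Minimal sketch (assumption): score how closely the two corneal highlights
# match using intersection-over-union (IoU) of their binary masks.
# 1.0 means identical highlights; 0.0 means no overlap at all.
from typing import List

Mask = List[List[bool]]


def similarity_score(left: Mask, right: Mask) -> float:
    """IoU of two same-sized highlight masks (True = highlight pixel)."""
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("masks must have the same shape")
    intersection = 0
    union = 0
    for row_left, row_right in zip(left, right):
        for a, b in zip(row_left, row_right):
            if a and b:
                intersection += 1
            if a or b:
                union += 1
    # No highlight in either eye leaves nothing to compare; score it as 0.0.
    return intersection / union if union else 0.0


def verdict(score: float, threshold: float = 0.5) -> str:
    """Map a score to a label using a hypothetical cut-off of 0.5."""
    return "likely real" if score >= threshold else "possible deepfake"


T, F = True, False
left = [[F, T, T],
        [F, T, F],
        [F, F, F]]
right_shifted = [[F, F, F],
                 [F, T, T],
                 [F, F, T]]

print(similarity_score(left, left))                    # → 1.0
print(similarity_score(left, right_shifted))           # → 0.2
print(verdict(similarity_score(left, right_shifted)))  # → possible deepfake
```

Scoring two empty masks as 0.0 is a design choice for this sketch; a real system might instead decline to score such an image at all.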

The Known Issues

It is worth noting that the tool has limitations of its own. For instance, it does not work if the subject is not looking at the camera or if one of the subject's eyes is not visible in the image. The scientists hope to eliminate these issues as they develop the tool further. For now, the tool can easily detect less sophisticated deepfake media, but it may struggle with higher-quality deepfakes and misclassify them.